If, as often-cited estimates suggest, 85 to 95 percent of AI projects fail to deliver the expected results, it is not because AI technology is weak. It is not due to a lack of models, GPUs, computing power, or technical skills.

In my opinion, one of the root causes is usually far simpler, and far more often underestimated.

Data is not structured, not accessible, not contextualized, or not reliable.

An AI model is not an oracle.

It does not create value on its own.

It can only exploit what it is given.

Without a solid foundation of relevant, organized, and governed data, AI produces fragile outcomes, high costs, and growing disappointment. And this is precisely where most organizations struggle.

AI is the cherry on top, not the cake

There is a growing tendency to treat AI as the starting point of transformation. In reality, AI should be the final layer, the cherry on top of a much deeper effort.

Before asking what AI can do, organizations should first ask:

- how data is produced and structured

- how systems communicate with each other

- how processes flow end-to-end

- how automation is designed and maintained

- how ownership and accountability are enforced

AI does not replace these fundamentals. It amplifies them.

When they are weak, AI amplifies chaos.

When they are strong, AI accelerates value.

Organizations want AI without having done the foundational Data work

For more than a decade, organizations have accumulated data without a coherent long-term strategy. Data grew organically through applications, projects, vendors, and local optimizations rather than through deliberate information design.

The result is familiar:

- fragmented databases

- non-versioned spreadsheets

- disconnected business silos

- scattered documents

- poorly integrated SaaS tools

- duplicated, inconsistent, or incomplete data

As long as data was mainly used for reporting or descriptive analytics, these weaknesses were tolerable. AI changes the rules entirely.

When organizations decide to “add AI,” they suddenly realize that their information assets are not usable as they are. Data exists, but it lacks structure, consistency, and meaning. There is no shared understanding of what is correct, current, or authoritative.

The metaphor is straightforward: trying to build a skyscraper on sand.

The technology may be impressive, but the foundations are unstable.

AI cannot structure data that you have not structured yourself

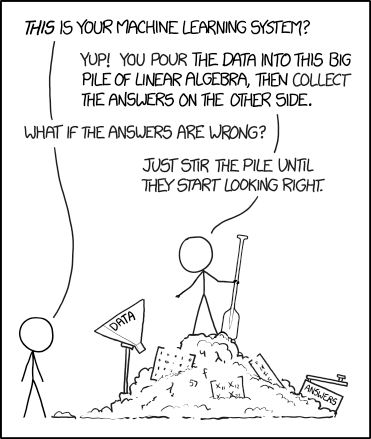

One of the most persistent misconceptions is the belief that AI will somehow clean things up, make sense of messy information, and reconstruct implicit business logic automatically.

This is an illusion.

Even the most advanced models cannot:

- determine which data source is the single source of truth

- rebuild governance from organizational chaos

- invent business relationships that were never formalized

- correct data without explicit rules

- understand organizational nuances that were never documented

AI is extremely good at exploiting well-structured data.

It is extremely bad at structuring disorder that humans themselves have never clarified.

This is why so many AI initiatives fail. Organizations expect AI to compensate for weak data governance. It cannot.
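To make the phrase “explicit rules” concrete, here is a minimal Python sketch. It assumes a hypothetical customer record that exists in several systems; the field names, the validation rules, and the source priority are illustrative assumptions, not a recommended standard. The point is that a person has to decide what counts as valid and which source wins before any model can rely on the result.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical precedence between systems (lower number = more authoritative).
# This ordering is decided by people; it is not something a model can infer.
SOURCE_PRIORITY = {"billing": 0, "crm": 1, "spreadsheet": 2}


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: str
    email: str
    country: str
    updated: date
    source: str


def validate(record: CustomerRecord) -> list[str]:
    """Return every explicit rule the record violates (empty list = valid)."""
    errors = []
    if "@" not in record.email:
        errors.append("email must contain '@'")
    # Format check only: it does not verify that the country code actually exists.
    country = record.country
    if not (len(country) == 2 and country.isalpha() and country.isupper()):
        errors.append("country must be a two-letter uppercase code")
    if record.source not in SOURCE_PRIORITY:
        errors.append(f"unknown source: {record.source}")
    return errors


def golden_record(records: list[CustomerRecord]) -> CustomerRecord:
    """Pick one authoritative record: drop invalid records, prefer the most
    authoritative source, and break ties with the most recent update."""
    valid = [r for r in records if not validate(r)]
    if not valid:
        raise ValueError("no record passes the explicit rules")
    return min(valid, key=lambda r: (SOURCE_PRIORITY[r.source], -r.updated.toordinal()))


if __name__ == "__main__":
    records = [
        CustomerRecord("C-42", "ana@example.com", "FR", date(2024, 3, 1), "crm"),
        CustomerRecord("C-42", "ana(at)example.com", "FR", date(2024, 5, 1), "billing"),
        CustomerRecord("C-42", "ana.m@example.com", "FR", date(2024, 1, 1), "billing"),
    ]
    print(golden_record(records).email)  # ana.m@example.com
```

The newest billing record is rejected by a rule a human wrote, and the older billing record wins over the CRM because of a precedence a human chose. Neither decision comes from the data itself.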

System-to-System integration comes before AI

Another foundational element is often overlooked: system-to-system integration.

Before discussing AI capabilities, it is worth asking a much simpler question: can systems reliably talk to each other?

Solid, well-designed, technology-agnostic APIs are a prerequisite for any meaningful AI strategy. If systems cannot exchange data cleanly, consistently, and predictably, AI will only amplify fragmentation.

Without strong APIs:

- automation becomes brittle

- data pipelines constantly break

- end-to-end visibility is impossible

- governance remains theoretical

In this context, discussing advanced AI orchestration, agents, or the Model Context Protocol (MCP) makes little sense. If the foundations are weak, adding AI-driven coordination only increases complexity without creating value.

System-to-system integration is not a detail. It is the backbone.
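In practice, exchanging data “cleanly, consistently, and predictably” means that both systems agree on an explicit contract and reject anything that breaks it. The Python sketch below assumes a hypothetical “order created” message; its fields and version number are illustrative assumptions. Real integrations usually express this with a schema standard such as JSON Schema or OpenAPI, but the principle is the same.

```python
import json
from dataclasses import dataclass, fields

# Hypothetical contract version agreed between the producing and consuming systems.
CONTRACT_VERSION = 1


@dataclass(frozen=True)
class OrderCreated:
    """Hypothetical 'order created' event; field names are illustrative."""
    version: int
    order_id: str
    customer_id: str
    amount_cents: int  # integer cents avoid floating-point rounding on amounts
    currency: str      # e.g. an ISO 4217 code such as "EUR"


class ContractError(ValueError):
    """Raised when a message does not match the agreed contract."""


def parse_order_created(payload: str) -> OrderCreated:
    """Parse a JSON message strictly: no missing or unknown fields,
    exact types, and the expected contract version."""
    data = json.loads(payload)
    if not isinstance(data, dict):
        raise ContractError("payload must be a JSON object")

    # The dataclass annotations double as the field list and expected types
    # (this works because the annotations are real classes, not strings).
    expected = {f.name: f.type for f in fields(OrderCreated)}
    missing = expected.keys() - data.keys()
    unknown = data.keys() - expected.keys()
    if missing or unknown:
        raise ContractError(f"missing={sorted(missing)} unknown={sorted(unknown)}")

    for name, expected_type in expected.items():
        # type(...) is used instead of isinstance so that JSON true/false
        # (Python bool, a subclass of int) is not accepted as a number.
        if type(data[name]) is not expected_type:
            raise ContractError(f"{name} must be {expected_type.__name__}")

    if data["version"] != CONTRACT_VERSION:
        raise ContractError(f"unsupported contract version {data['version']}")
    return OrderCreated(**data)
```

Rejecting a malformed message at the boundary, with a clear error, is what keeps pipelines from breaking silently further downstream.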

Automation is the real force multiplier

AI is often presented as the primary lever of efficiency. In practice, automation is the real force multiplier, and AI enhances it rather than replacing it.

Effective automation relies on clear processes, reliable data flows, deterministic system behavior, and strong integration patterns. When these elements are in place, AI can significantly improve performance by optimizing, enriching, or accelerating existing workflows.

When they are not, AI becomes a fragile layer sitting on top of broken processes.

Generative AI is most powerful when it complements automation, not when it tries to replace structure with probabilistic behavior.

The real challenge is Information Architecture, not AI Technology

If organizations want AI initiatives that deliver sustainable results, the hardest work is not choosing models or tools. It is designing a coherent information architecture.

This means clarifying data ownership, defining domain models, deciding where data lives and how it flows, enforcing quality standards, and providing context.

Data does not need to be centralized at all costs, but it does need to be coherent. Distribution without coordination is not decentralization; it is entropy.

Context is especially critical. AI is excellent at pattern matching, but it performs poorly without business meaning. Context transforms raw data into information, and information into value.

Generative AI depends even more on structured Data

Large language models are often perceived as universal problem solvers. They are not.

Even the best models fail when documents are ambiguous, sources contradict each other, information lacks hierarchy, data is outdated, or access rules are inconsistent.

Organizations that succeed with generative AI rely on disciplined foundations: classification, assisted structuring, intelligent chunking, domain-specific models, and automated cleaning pipelines.

But there is a strict order. These mechanisms come after the initial structure is established, never before.
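As an illustration of that order, consider one of these mechanisms, structure-aware chunking. The minimal Python sketch below assumes documents that already use Markdown-style `#` headings; it splits on those headings and attaches the heading path to every chunk, so each piece keeps its business context when it is retrieved. The function and class names are hypothetical, the size limit is counted in words rather than model tokens, and a production pipeline would also need to handle tables, lists, and code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    heading_path: tuple[str, ...]  # e.g. ("Refund policy", "Exceptions")
    text: str

    def with_context(self) -> str:
        # Prepend the heading path so the chunk carries its own context.
        return " > ".join(self.heading_path) + "\n" + self.text


def chunk_by_headings(document: str, max_words: int = 200) -> list[Chunk]:
    """Split a Markdown-style document on '#' headings, attach the heading
    path to every chunk, and cap each chunk at max_words words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    chunks: list[Chunk] = []
    path: list[str] = []
    buffer: list[str] = []

    def flush() -> None:
        # Emit the text gathered under the current heading in fixed-size windows.
        words = " ".join(buffer).split()
        for start in range(0, len(words), max_words):
            chunks.append(Chunk(tuple(path), " ".join(words[start:start + max_words])))
        buffer.clear()

    for line in document.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            flush()
            level = len(stripped) - len(stripped.lstrip("#"))
            # Drop headings at this level or deeper, then record the new one.
            del path[level - 1:]
            path.append(stripped[level:].strip())
        elif stripped:
            buffer.append(stripped)
    flush()
    return chunks


if __name__ == "__main__":
    doc = "# Refund policy\n## Exceptions\nFinal-sale items cannot be returned."
    for chunk in chunk_by_headings(doc):
        print(chunk.with_context())
```

If the source documents have no headings at all, there is nothing for this function to preserve: the structure has to exist before the mechanism can exploit it.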

Without Data structuring, AI becomes an expensive illusion

This is exactly what multiple studies and field experience show. AI projects fail not because models do not work, but because data is unusable.

In other words:

AI cannot fix data problems.

It only amplifies them.

This explains why teams become disillusioned, ROI fails to materialize, costs explode, models hallucinate, trust erodes, and value never reaches production.

The AI bubble is not only financial. It is also methodological.

Conclusion: Structuring Data is step 1 for AI value

If organizations want to extract real value from AI, they must accept a simple but uncomfortable truth.

AI is not magic.

It is a machine for extracting value from data.

And without structured data, it extracts nothing.

The next wave of progress will not be driven only by better models or more GPUs. It will be driven by stronger data architectures, cleaner pipelines, clearer taxonomies, knowledge graphs, ingestion workflows, governance, and quality.

This is where differentiation will emerge.

This is where sustainable value will be built.

This is where the real AI revolution will actually begin.